Expanse

Computing Without Boundaries

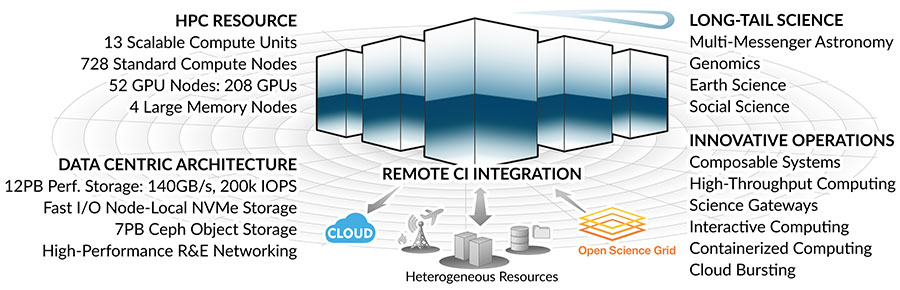

Expanse supports SDSC’s vision of “Computing without Boundaries” by increasing the capacity and performance for thousands of users of batch-oriented and science gateway computing, and by providing new capabilities that will enable research increasingly dependent upon heterogeneous and distributed resources composed into integrated and highly usable cyberinfrastructure.

| System | Performance | Key Features |
|---|---|---|
| Expanse | 5 Pflop/s peak; 93,184 CPU cores; 208 NVIDIA GPUs; 220 TB total DRAM; 810 TB total NVMe | Standard Compute Nodes (728 total); GPU Nodes (52 total); Large-memory Nodes (4 total); Interconnect; Storage Systems; SDSC Scalable Compute Units (13 total). Entire system organized as 13 complete SSCUs, each consisting of 56 standard nodes and four GPU nodes connected with 100 Gb/s HDR InfiniBand |
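The headline figures in the table can be cross-checked against the SSCU layout with a little arithmetic. The sketch below uses only numbers quoted above. The per-node values it derives rest on an assumption that is not stated in the table: that the 93,184-core total counts standard compute nodes only, and that GPUs are spread evenly across the GPU nodes.

```python
# Figures quoted in the system table.
SSCUS = 13
STANDARD_NODES_PER_SSCU = 56
GPU_NODES_PER_SSCU = 4
STANDARD_NODES = 728
GPU_NODES = 52
TOTAL_CPU_CORES = 93_184
TOTAL_GPUS = 208


def derive_per_node() -> dict:
    """Check the SSCU totals and derive per-node counts.

    Assumption (not stated in the table): the CPU core total covers
    standard compute nodes only, and GPUs are evenly distributed.
    """
    # 13 SSCUs x 56 standard nodes and 13 x 4 GPU nodes should match the totals.
    if SSCUS * STANDARD_NODES_PER_SSCU != STANDARD_NODES:
        raise ValueError("standard node count does not match SSCU layout")
    if SSCUS * GPU_NODES_PER_SSCU != GPU_NODES:
        raise ValueError("GPU node count does not match SSCU layout")

    cores_per_node, core_rem = divmod(TOTAL_CPU_CORES, STANDARD_NODES)
    gpus_per_node, gpu_rem = divmod(TOTAL_GPUS, GPU_NODES)
    if core_rem or gpu_rem:
        raise ValueError("totals do not divide evenly across nodes")

    return {
        "cores_per_standard_node": cores_per_node,
        "gpus_per_gpu_node": gpus_per_node,
    }


per_node = derive_per_node()
print(per_node)
```

Under those assumptions the totals divide evenly, which suggests 128 cores per standard node and 4 GPUs per GPU node.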

Trial Accounts

Trial Accounts give users rapid access for the purpose of evaluating Expanse for their research. This can be a useful step in assessing the value of the system by allowing potential users to compile, run, and do initial benchmarking of their application prior to submitting a larger Startup or Research allocation request. Trial Accounts are for 1,000 core-hours, and requests are fulfilled within one (1) working day.
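To get a feel for how far a 1,000 core-hour Trial Account might stretch, the following sketch estimates wall-clock time for a given core count. It assumes the simplest possible charging model (cores multiplied by wall-clock hours), which may not match the actual accounting policy, and it assumes 128 cores per standard node, a value inferred from the table (93,184 cores over 728 nodes) rather than stated in the text. The function names are illustrative only.

```python
TRIAL_CORE_HOURS = 1_000          # Trial Account size stated above
ASSUMED_CORES_PER_NODE = 128      # assumption: 93,184 cores / 728 standard nodes


def core_hours_used(cores: int, hours: float) -> float:
    """Core-hours consumed under a simple cores x hours model (an assumption)."""
    if cores <= 0 or hours < 0:
        raise ValueError("cores must be positive and hours non-negative")
    return cores * hours


def max_wallclock_hours(cores: int, budget: float = TRIAL_CORE_HOURS) -> float:
    """Longest run, in hours, that fits in the budget at a given core count."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return budget / cores


# One full (assumed 128-core) node versus a small 16-core benchmark run.
full_node_hours = max_wallclock_hours(ASSUMED_CORES_PER_NODE)
small_job_hours = max_wallclock_hours(16)
print(f"full node: {full_node_hours} h, 16 cores: {small_job_hours} h")
```

Under these assumptions, a single full node would exhaust the trial budget in under eight hours, so initial benchmarking is likely best done with short runs or reduced core counts.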