Annual Report

SDSC Annual Report 2024-2025

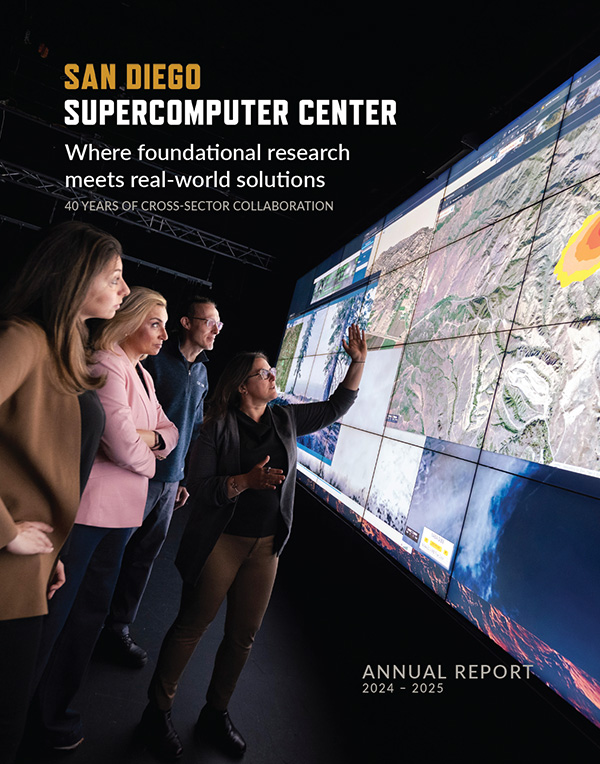

Where Foundational Research Meets Real-World Solutions

This annual publication highlights SDSC's accomplishments and ongoing leadership, with explorations in artificial intelligence; machine learning; cloud, edge and distributed high-throughput computing; and more.