
TERASCALE HORIZONS

Written in the Stars:


PROJECT LEADER: Peter H. Hauschildt, University of Georgia; Edward Baron, University of Oklahoma; France Allard, Centre de Recherche Astronomique de Lyon, France

SAC TEAM: Laura Nett-Carrington, Stuart Johnson, Jay Boisseau, SDSC

"Spectroscopy has allowed us to discover the expansion of the universe and determine the composition of the Sun and stars," Hauschildt said. "In fact, studies of spectral lines provide our only direct information on the velocities and temperatures of objects thousands of light-years from us and reveal the signatures of the elements and molecules that comprise them. Unfortunately, this information can be extremely difficult to interpret, and the spectra of some objects contain millions of spectral lines that form under conditions that cannot be duplicated in a laboratory. We have to turn to computer simulations."

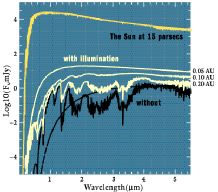

"And when we apply PHOENIX to supernovas, we actually get to 'see the insides' of the stars," Baron added. "This makes PHOENIX a very important tool for testing our theories of stellar evolution." (Figure 1)

As astrophysical modeling packages go, PHOENIX is a jack-of-all-trades: it computes model atmospheres and synthetic spectra for Jovian planets (Figure 2), brown dwarfs, novas, supernovas, red and white dwarfs (Figure 3), solar-type stars, luminous red and blue giant stars, and even accretion disks in active galactic nuclei. It is correspondingly complex. Calculating the absorption effects that produce millions of atomic and molecular spectral lines, along with the radiative transfer, the nuclear and atomic physics, and the hydrodynamic modeling of stellar and planetary atmospheres, takes more than 470,000 lines of code. Each spectrum calculation solves the radiative transfer equation for a large number of line transitions at many wavelength points, typically 150,000 to 500,000 spanning the optical spectrum from the ultraviolet to the near infrared. The processing requirements of these calculations are enormous. The sheer quantity of data (about 600 million spectral lines, of which a given simulation might use 300 million) also makes certain parts of the simulation very I/O intensive.
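A deliberately simplified sketch can show what it means to solve the radiative transfer equation at many wavelength points. It assumes a plane-parallel atmosphere split into layers, each with a constant source function. That is a textbook toy, not PHOENIX's solver, which is far more elaborate. The helper names `emergent_intensity` and `synthetic_spectrum` and all numbers are hypothetical. Consider a layer of optical thickness Δτ with constant source function S. There, dI/dτ = S - I has the exact solution I_out = I_in e^(-Δτ) + S(1 - e^(-Δτ)). Each wavelength point is solved independently of every other, so the outer loop is a natural target for data parallelism.

```python
import math


def emergent_intensity(source, dtau, i_incident=0.0):
    """Hypothetical helper: follow one ray outward through plane-parallel layers.

    source[k] and dtau[k] are the source function and optical thickness of
    layer k, ordered from the deepest layer to the surface. The source
    function is assumed constant within each layer (a toy assumption).
    """
    intensity = i_incident
    for s, dt in zip(source, dtau):
        attenuation = math.exp(-dt)
        # Exact solution of dI/dtau = S - I across a layer with constant S:
        # incoming light is attenuated, and the layer adds its own emission.
        intensity = intensity * attenuation + s * (1.0 - attenuation)
    return intensity


def synthetic_spectrum(source_by_wavelength, dtau_by_wavelength):
    """Hypothetical helper: one independent formal solution per wavelength point."""
    # No wavelength depends on any other, so this loop could be split across
    # processors (data parallelism); here it simply runs serially.
    return [emergent_intensity(s, dt)
            for s, dt in zip(source_by_wavelength, dtau_by_wavelength)]


# Toy two-layer atmosphere: a hot deep layer (S = 2.0) under a cool surface layer (S = 1.0).
source = [2.0, 1.0]
continuum_dtau = [5.0, 0.01]  # surface layer nearly transparent in the continuum
line_dtau = [5.0, 5.0]        # surface layer opaque at line center
spectrum = synthetic_spectrum([source, source], [continuum_dtau, line_dtau])
# Line-center intensity comes out lower than the continuum: an absorption line.
print(spectrum)
```

At line center, the opaque cool surface layer hides the hot interior, which is how absorption lines arise. A real model repeats this for hundreds of thousands of wavelength points and millions of line transitions.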


Figure 2. Hot Jupiters. In 1995, a planet similar in size to Jupiter was discovered circling the solar-type star 51 Pegasi, in an orbit so close that the star must be heating the planet to near-incandescence. Since then, more than 20 "hot Jupiters" have been discovered. These infrared spectrum plots from PHOENIX depict possible energy profiles from hot Jupiters orbiting their stars at 1/20, 1/10, 1/5, and many times the Earth-Sun distance. The simulations assume that the planets are very young and retain a high effective temperature (1,000 Kelvin) from their formation in addition to being illuminated by their stars.


These demands provided the incentive for a SAC team at SDSC to step in. Working with Hauschildt, Baron, and Canadian astrophysicist France Allard are Laura Nett-Carrington, Stuart Johnson, and Jay Boisseau, SDSC's associate director for Scientific Computing (and a Ph.D. astrophysicist himself).

To accommodate the large CPU and memory requirements of PHOENIX, Hauschildt and Baron had already developed a parallel version of the code using the Message Passing Interface (MPI) library, a versatile and portable solution for IBM SP, SGI, Cray, and other computers. Although the single-processor speed of a machine such as an IBM SP is fairly high to begin with, they found that putting even a small number of additional processors to work led to a significant speedup.

"PHOENIX uses both task and data parallelism to optimize performance and allow larger model calculations," Hauschildt said. "Initial parallelization of the code was straightforward in that we distributed the computations among the different modules (this is called task parallelism), and we were further able to subdivide some of the modules by utilizing data parallelism: for example, independently working on individual spectral lines."

"We'd been running PHOENIX on the Cray C90 at SDSC," Baron said. "We initially had high hopes for porting it to the T3E, but it turned out that there just isn't enough memory per node to even compile PHOENIX. It takes a much larger T3E, such as the one at NERSC [the National Energy Research Scientific Computing Center], to run it even somewhat successfully."

The team then turned to the IBM SP systems at SDSC. In porting PHOENIX to Blue Horizon, Hauschildt was able to use all eight processors of each symmetric multiprocessing (SMP) node efficiently, rather than only four as in previous ports to IBM SP machines. This was accomplished by exploiting loop-level parallelism with OpenMP compiler directives. Going from four to eight CPUs per node boosted performance by a factor of 1.7 to 1.8. The researchers ran a simulation of the Sun's atmosphere and spectrum on 40 nodes (320 CPUs in total, eight per SMP node). They achieved speedups of 160 to 360 times in various modules compared with a serial version of the code on a single Power3 CPU.
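The scaling figures above reduce to simple ratios. Parallel efficiency is the speedup divided by the factor by which the processor count grew. A value of 1.0 is perfect linear scaling, and anything above 1.0 is superlinear. The sketch below applies that definition to the numbers reported here. It assumes each speedup is measured against the baseline the article names (four CPUs per node, or one serial Power3 CPU). The function name `parallel_efficiency` is illustrative.

```python
def parallel_efficiency(speedup, processor_ratio):
    """Speedup divided by the growth in processor count (1.0 = perfect linear scaling)."""
    return speedup / processor_ratio


# Four to eight CPUs per node doubles the processors, with a reported 1.7x to 1.8x gain.
for gain in (1.7, 1.8):
    print(f"4 -> 8 CPUs per node: efficiency {parallel_efficiency(gain, 8 / 4):.2f}")

# 40 nodes x 8 CPUs each, compared with one serial Power3 CPU.
cpus = 40 * 8
for gain in (160, 360):
    eff = parallel_efficiency(gain, cpus)
    kind = "superlinear" if eff > 1.0 else "sublinear"
    print(f"{cpus} CPUs, {gain}x speedup: efficiency {eff:.3f} ({kind})")
```

The doubling within a node yields efficiencies of 0.85 to 0.90. Across 320 CPUs, efficiency ranges from 0.5 to 1.125 depending on the module. Efficiencies above 1.0 commonly arise when each processor's share of the data fits in faster cache or memory. The article does not say which cause applies to PHOENIX.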
"Some routines scaled beautifully," Baron said. "In fact, opacity calculations on 320 processors ran at roughly 350 times the serial rate; that's better than linear scaling. The total speedup depends on the job and which particular modules are used."

Hauschildt was quite enthusiastic about the resulting performance figures. "I am very impressed with these numbers," he said. "The SMP enhancement gives the code a nice boost above the MPI parallelization. This box needs less than 10 minutes per iteration for our solar model. We can now run a full-scale, hyper-sophisticated model of the Sun in a few hours, tops. It used to take a week. Incredible!"

"We have run PHOENIX on 100 nodes with four MPI processors each, for 400 CPUs," Hauschildt said, "and in full-scale runs with the SMP enhancement, we'll use eight per node, so that will take us to 800 CPUs."

The formal SAC project will run until June 2000, but informal collaboration is expected to continue indefinitely. "We know the code and the researchers, so it makes sense for us to sustain the great working relationship we have with each other," Nett-Carrington said. The continuing SAC work will include code profiling and SMP optimization, efforts already under way. In addition, the I/O-intensive nature of PHOENIX will allow SDSC to evaluate the performance of the IBM General Parallel File System (GPFS). "Our tests so far indicate that GPFS works very well indeed, roughly 10 times better than the old Parallel I/O File System," Hauschildt said.

A 32-bit serial version of PHOENIX runs on Sun SPARC computers. With the help of Sun Microsystems and its consultant Southwest Parallel Software, the code is being ported to the 64-bit, 64-CPU Sun HPC 10000 at SDSC. "The big Sun still has problems, but they're being worked out one by one," Hauschildt said. "Once this box works, it'll reduce the I/O to zero by using its vast memory directly."
"The ability to run detailed PHOENIX calculations on Blue Horizon in a relatively short time will allow us to use Type II supernovas as cosmological probes into the distant universe," Baron said. "If we can accurately model a supernova explosion, we can determine its intrinsic brightness, and from that its distance. This will help astronomers measure the expansion of our universe and determine whether the expansion actually is accelerating, as some claim, due to some previously unknown repulsive force."
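The step from intrinsic brightness to distance is the inverse-square law. Astronomers usually write it in magnitudes as the distance modulus, m - M = 5 log10(d / 10 pc). The minimal sketch below assumes a model supplies the absolute magnitude M and observations supply the apparent magnitude m. It ignores extinction and cosmological corrections, which real measurements of distant supernovas must account for. The function names and the example magnitudes are hypothetical.

```python
def distance_parsecs(apparent_mag, absolute_mag):
    """Invert the distance modulus m - M = 5 * log10(d / 10 pc); no extinction correction."""
    return 10.0 ** ((apparent_mag - absolute_mag + 5.0) / 5.0)


def distance_megaparsecs(apparent_mag, absolute_mag):
    """Same distance expressed in megaparsecs (1 Mpc = 1e6 pc)."""
    return distance_parsecs(apparent_mag, absolute_mag) / 1.0e6


# Hypothetical numbers for illustration only: a modeled absolute magnitude of -17
# and an observed apparent magnitude of 18 give m - M = 35.
print(distance_megaparsecs(18.0, -17.0))  # about 100 Mpc
```

An error of one magnitude in the modeled M changes the inferred distance by a factor of 10^(1/5), about 1.58. This is why the accuracy of the supernova model matters so much.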
